All research is political

Trump Diaries Episode 3: Behind the scenes on a sensitive diary study

Flashback to three months ago

On the morning of Wednesday, November 9, I lingered with fellow researchers at the coffee station in a state of stunned silence. My colleagues can be a chatty bunch, but on that day, we were conspicuously quiet.

As residents of the big blue city of Chicago, we were feeling the repercussions of a sturdy bubble bursting. As voters, we were profoundly disoriented. The polls had been so erratic, so two-dimensional—and then so wrong.

Then someone said, “We need to launch a mission,” and our emotions re-oriented themselves around a dawning curiosity. Time for a research project! We weren’t sure exactly what we wanted to learn, but we knew we had a short window to connect with Americans as they too processed this unexpected shift in personal perception and national perspective.

But the politics! We don’t often take on overtly political topics, so we were daunted by the politics of it all: not only the sensitivity of the subject matter, but also the sensitivities of the participants, of our eventual stakeholders, and of ourselves. How could we deal with our own biases while designing, fielding, analyzing and reporting on such a politicized subject?

Then it dawned on us that researchers deal with this kind of thing daily: Research is always political.

All research is political

Whether the politics are national, organizational or departmental, there’s always something at stake in a good research project: the potential to uncover something with unanticipated consequences for stakeholders whose positions may or may not be known and may or may not be entrenched.

The stakes can also be unanticipated and fraught for participants, with topics that create strong emotional reactions. Does the topic induce a desire for privacy, fear of judgment, social stigma or sanctions? Or do they perceive a subtle bias in the study and overcompensate to ensure their own entrenched positions are strongly represented?

With known or unknown stakes and sensitivities in play, researchers can feel tremendous pressure at all stages of a project, not just to get the research right but to get the right answer. While sensitive research topics aren’t usually so literally political, we’re no strangers to choosing our wording carefully, counterbalancing potential bias, and facilitating objective responses from our scouts. So we went for it.

Methodology

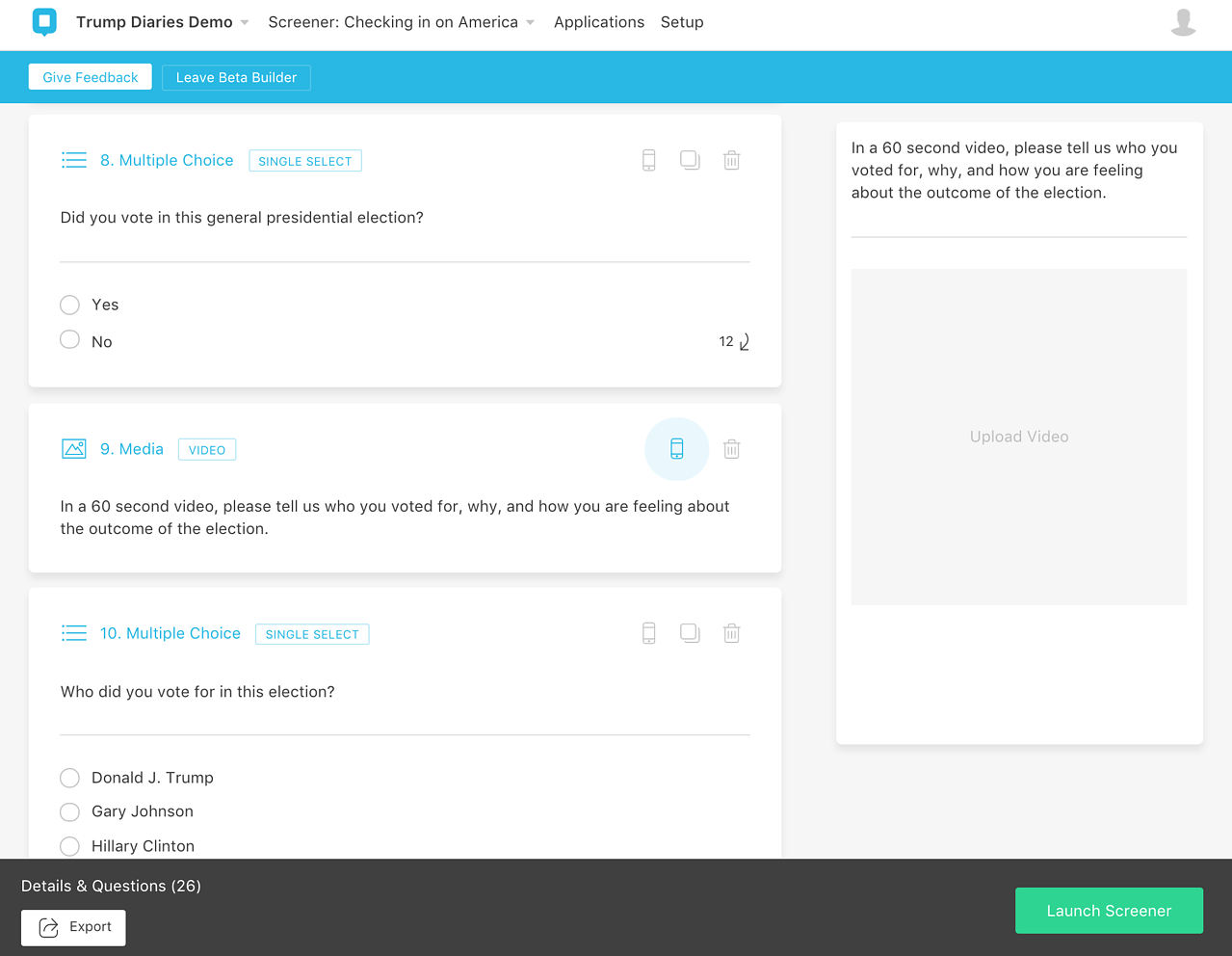

We designed our Trump Diaries project with strategies that we’ve developed while assisting and guiding our clients in running thousands of projects on the dscout platform. Before diving into the strategies themselves, here’s an overview of our methodology for context. Our full research design is also available for download. We’ve also published two other posts with our findings: Voters who broke the mold and Welcome to the Flashpoint Presidency.

5 Strategies for Managing Sensitive Research

1. Confront bias from the start

Recognize and discuss your bias and your stakeholders’ biases.

Whether or not your research is explicitly political, there’s often someone looking to influence the story. In this case, I had to acknowledge that that person was probably me. I’m a young, urban, feminist liberal daughter of an immigrant. My research partner is a queer-identified liberal. Neither of us feels much empathy for Trump voters, nor do we understand their motivations. So we got our biases on the table from the start. We identified the risks of alienating scouts, alienating stakeholders, or influencing the data. We discussed our personal hopes for the outcome of the research. By calling out where we selfishly wanted to see the story go, we had a roadmap to check ourselves if we started driving in that direction.

Don’t go it alone.

With our bias on the table, we were acutely aware that reinforcements would be needed to keep us neutral. While designing the study, we referenced language and best practices from other sources and organizations that specialize in political research. For example, when writing a question about our scouts’ top voting issues, we looked to the Pew Research Center for guidance. Throughout the project, we embraced bipartisan cooperation from a colleague, who not only holds many differing views from our own, but also ran a political research company. He reviewed our writing and framing every step of the way. He kept us honest, pointing out how even the ordering of the options in a multi-select question could reflect a subtle bias.
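One common way to reduce the kind of ordering bias described above is to shuffle answer options for each participant. The sketch below is only an illustration, not how the study itself was fielded: the function name `shuffled_options`, the issue list, and the scout IDs are all hypothetical.

```python
import random


def shuffled_options(options, participant_id, pinned_last=("Other",)):
    """Return the options in a per-participant random order.

    Seeding a local RNG with the participant ID keeps the order stable if
    the same person reopens the question, while varying it across people.
    Catch-all choices such as "Other" stay at the end of the list.
    """
    movable = [o for o in options if o not in pinned_last]
    pinned = [o for o in options if o in pinned_last]
    rng = random.Random(participant_id)  # local RNG; global state untouched
    rng.shuffle(movable)
    return movable + pinned


# Hypothetical issue list, for illustration only.
issues = ["Economy", "Health care", "Immigration", "Terrorism", "Other"]
for pid in ("scout-001", "scout-002"):
    print(pid, shuffled_options(issues, pid))
```

Shuffling does not remove bias in the wording of the options themselves, so a review by someone with a different point of view is still worth having.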

2. Be methodical about your methodology

Consider the benefits and limitations of various methodologies.

For sensitive research, be extra diligent about choosing the right methodology. Our bias is toward using the dscout platform, naturally. But we listed its advantages and disadvantages, as well as those of other methods to which we have access.

For instance, by its very nature as a mobile research platform, dscout is going to be challenged in reaching the elderly, low-income voters and others without smartphones. In the advantage column, however, are speed, privacy and the ability to catch participants in the moment.

Consider the likely human response to your sensitive topic.

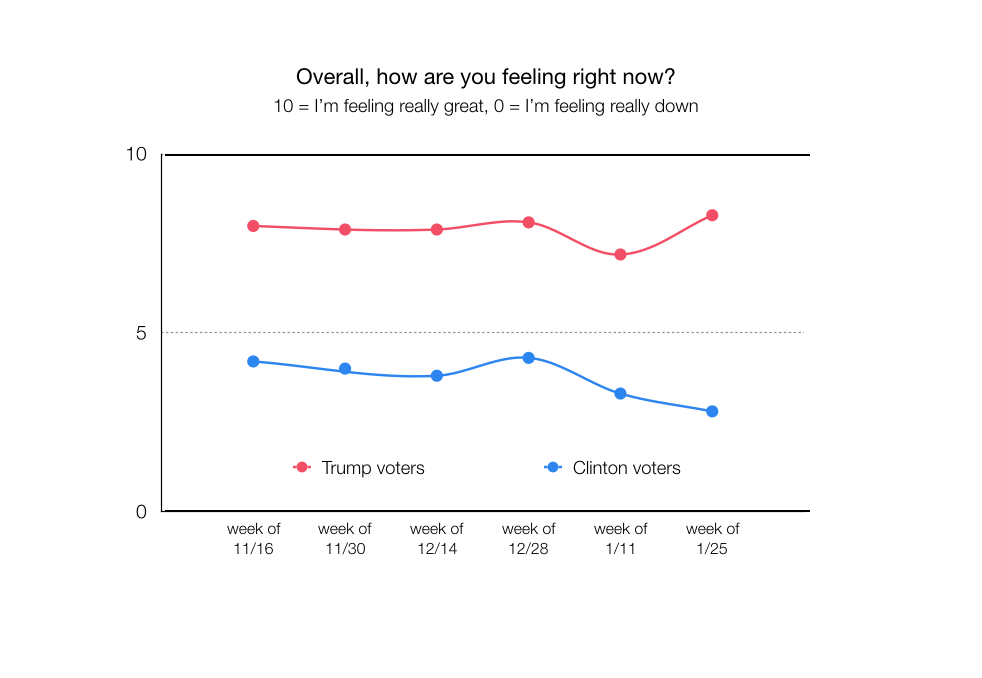

We designed our project with a longitudinal format capturing both “check-ins” and “moments” missions to follow the ebbs and flows of our scouts’ emotional journey. Frequency and repetition caught the blips but also allowed for some normalization of response over time. Then, by asking for a “reflection” in the final two weeks of participation, we saw which themes or events bubbled up as most resonant and compared those against the longitudinal missions.

3. Design for discovery

Start wide and let participants tell you what is important.

We framed the first two missions to be general with regard to scouts’ observations and feelings, with no specific inquiries about policies or events. We monitored scout entries as they came in, so we could design a third mission to validate and quantify what we thought we were hearing. As we tagged videos, we noticed how many of our scouts’ entries related to Trump’s use of social media. This observation suggested a series of specific closed-ended questions about traditional vs. social media to help us quantify what we observed in the open-ended responses. And since scouts were so engaged, we were able to get 100 responses to that last mission in a little over 24 hours.

Screen for reflective, passionate and articulate scouts.

Our screener included questions to help us gauge a scout’s ability to articulate his or her opinions with nuance. Capturing a 60-second video clip from scouts lets us hear them develop and express their thoughts and emotions. We also use open-ended text questions to screen for thoughtfulness. The question “If you had to describe your vision for the country in a few sentences, what would you say?” was particularly helpful in finding scouts with well-articulated perspectives. There’s a certain amount of “casting” in any qualitative study. For a sensitive topic like the emotions around a voting decision, it’s even more critical to find participants who can speak directly to your project’s audience.

Seek out voices from the middle.

The passion of scouts on one extreme or the other can make for great video and provide a lot of fodder for analysis, but we also made sure to seek out less polarized opinions. These came from people who conveyed a likelihood of questioning, considering and adding layers to the discussion.

4. Take good care of your scouts

Address privacy concerns head on.

Your scouts may be sensitive about what you’re using the data for. Be honest and upfront, allowing them to opt out if they don't want their submissions publicly available. Whenever a scout expressed discomfort or requested confidentiality, we assured them their entries would be private and asked them to continue.

Create a bond.

In addition to feeling vulnerable, scouts may wonder if they are talking about the right thing. It was important to assure them they were doing well and that there were no “right” answers. We sent messages to keep scouts feeling engaged and encouraged. And although a handful of us worked on this project, we were purposeful in having only one researcher communicate with the participants, sending messages, reminders, and encouragement. Hearing from the same researcher over time makes scouts less likely to get self-conscious about how many people are reading their entries.

5. Find a story that has bite without bias

Follow the numbers.

Open-ended discovery offered many roads to pursue. But even in regular qualitative research, your storyline should be supported by many responses, not just an anecdote or two.

For example, by tagging each entry, we were able to observe which social media flashpoints were most resonant for people across the political spectrum. In a similar sense, by asking for sentiment ratings with each check-in entry, we could easily monitor trends throughout the three-month study.
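As a rough sketch of what “following the numbers” can look like once entries are tagged and rated, the snippet below counts tags per voter group and averages sentiment per week. The data, group labels, tag names, and the 1-to-5 sentiment scale are all made up for illustration; they are not drawn from the study.

```python
from collections import Counter, defaultdict
from statistics import mean

# Hypothetical entries: (voter group, week of study, sentiment 1-5, tags)
entries = [
    ("Trump voter", 1, 4, ["social media", "economy"]),
    ("Clinton voter", 1, 2, ["social media"]),
    ("Trump voter", 2, 3, ["cabinet picks"]),
    ("Clinton voter", 2, 1, ["social media", "cabinet picks"]),
]


def tag_counts_by_group(entries):
    """Count how many entries in each group carry each tag."""
    counts = defaultdict(Counter)
    for group, _week, _sentiment, tags in entries:
        counts[group].update(set(tags))  # count each tag once per entry
    return counts


def mean_sentiment_by_week(entries):
    """Average the sentiment ratings submitted in each week."""
    by_week = defaultdict(list)
    for _group, week, sentiment, _tags in entries:
        by_week[week].append(sentiment)
    return {week: mean(scores) for week, scores in sorted(by_week.items())}


for group, counts in tag_counts_by_group(entries).items():
    print(group, counts.most_common())
print(mean_sentiment_by_week(entries))
```

A tag that shows up in many entries across groups is a safer basis for a storyline than one vivid video.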

It’s always challenging to quantify qualitative responses. One technique we love is asking for “three word” responses: “How are you feeling about the outcome of the election? Please list three words that capture your current emotions.” With text analysis, this question teed up common themes and made it easy to compare them across subgroups in the study.
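A minimal sketch of that kind of text analysis, assuming responses have already been exported as plain strings and split by subgroup. The group names and responses here are invented, and `word_counts` is a hypothetical helper rather than a dscout feature.

```python
import re
from collections import Counter


def word_counts(responses):
    """Count how many respondents used each word in a 'three word' answer."""
    counts = Counter()
    for text in responses:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(set(words))  # one vote per respondent per word
    return counts


# Invented responses, grouped by a hypothetical subgroup label.
responses_by_group = {
    "Group A": ["hopeful, relieved, excited", "Hopeful anxious curious"],
    "Group B": ["anxious, sad, shocked", "shocked; anxious; angry"],
}

for group, responses in responses_by_group.items():
    print(group, word_counts(responses).most_common(3))
```

Real responses would likely need more cleanup, such as merging spelling variants or near-synonyms, before subgroup comparisons mean much.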

Have a POV without turning off your audience.

Before we published our first article in the Trump Diaries series, we asked ourselves: Who is our audience? What do they need or want? What will make them pay attention? Within a few weeks after the election, we received more than a handful of Trump-related research requests from clients and prospective clients. Many of these inquiries were as general as “we want to understand Middle America” or “we want to see a day in the life of a Trump voter.” We know that many of our readers are younger, urban, educated and lean left politically.

With these insights in mind, we set out to find the right opening article for the Trump Diaries series. Our objective was to publish something relevant, qualitative and balanced between thought-provoking and self-evident. Relevant, meaning we serve this curiosity about a certain side of the country. Qualitative, because given our own disillusionment with polls and traditional measures, we were compelled to stick with our bread and butter. And balanced, meaning content that is thought-provoking enough to be new and interesting but self-evident enough that it doesn’t require much spin or editorializing by us.

Share the microphone.

When presenting or reporting on sensitive topics, don’t be the only voice in the room. Designers and decision makers can only hear it from you so often. Let them hear it from the user—or in this case, the voter—directly.

While the quantification of our entries helped us check our bias and validate our story, the videos and quotes were also a huge asset. We incorporated scout videos, audio recordings and verbatims into the story to create empathy and context.

The Stakes of Sensitive Research

This project was a challenge. It pushed us to drill on the fundamentals and flex new research muscles altogether. We had to hunt down liabilities in our research approach to counterbalance sensitivities. And we had to come face to face with our own shortcomings as biased humans in order to be neutral researchers.

Yet as novel as the subject matter and particular sensitivities in this project were, the challenges are common to so much good research: It’s political. There are stakes. And while as Americans we might wish for less high-stakes politics in our lives right now, I personally don’t wish for lower stakes in our work as researchers.

Jaymie Wahlen is the VP of Customer Success at dscout, where she works to make dscout the most customer-centric company on the block. When she’s not leading dscout’s team of Research Advisors, you can find Jaymie spinning a pottery wheel, or seeking out the world’s best street food.
