
February 25, 2025


For the last four years, I've been building health interventions—helping people stress less, sleep more, and so much more. Recently, I have been working on a tool that uses generative AI.

Headspace started as a mindfulness and meditation app but has grown to become much more. We offer coaching, therapy, psychiatry services, and digital content to support someone's mental health journey.

This project is our first member-facing AI experience. Because we are a mission-driven healthcare company, you can imagine how using AI within the sensitive context of mental health can make many people uncomfortable.

As we were getting started, there were a lot of strong feelings about using AI. On one hand, stakeholders were very excited about AI and how it could scale access to mental health tools globally.

On the other hand, there was a lot of skepticism and nervousness about how AI could harm someone's mental health if it wasn't designed well, given how inherently vulnerable this space is.

We learned that this nervous sentiment was also present among our members. We conducted a large-scale study to understand attitudes towards AI and how desirable it was, and while there was a high degree of trust in Headspace, there was also a lot of skepticism around using AI in mental health.

One interesting insight with many AI features was that people needed to interact with the technology to better understand it.

After using it, people trusted it more. We saw this specifically with ChatGPT: people who had used it had more positive attitudes towards it. So we had to encourage people to try the experience so they could come to trust it and reap its health benefits.

It all came down to this: what can we do as designers to help our members feel safe interacting with AI? And as a mental health company that is passionate about and invested in improving people’s mental health, how do we keep our members safe from any unintended consequences of AI?

The first step was to find a focused and valuable use case that balanced all of these factors. This might seem similar to the desirability, viability, and feasibility matrix that Nicole shared. Ours has a few additions.

First, it had to be something people wanted and that could help them in their lives. In the generative research we've done with Dscout over the years, one theme kept coming up: people have a reflection practice outside of Headspace. That's a capability our app did not support.

From clinical best practices, we know that reflection can be beneficial and supportive of one's mental health. It gives clarity, increases a sense of self-efficacy, and can empower people to take more agency in their own journey. Overall, it benefits one's well-being.

We also had to consider the needs of our ecosystem of services as a whole. As I mentioned, our ecosystem includes human care providers. So how does reflection support a member's experience with their care provider? It turns out reflection is very supportive of, and complementary to, seeing a human provider, and it is often recommended in the form of activities within a member's care plan.

Within the larger context of mental health, this use case around reflection became a safe container for us to leverage generative AI, and specifically conversational AI, to understand someone's unique context and encourage deep reflection.

This led us to build Ebb, a guided reflection experience that uses conversational AI. Ebb is your compassionate companion. It creates a safe space for you to express yourself. It asks you open-ended, deep, reflective questions with the sole purpose of helping you be more self-aware and offers non-judgmental support along the way.

We now had a solid use case that people would likely benefit from, but we still had to address their barriers to feeling safe engaging with AI.

Through the upfront experience and the research we had done, we realized that we needed to emphasize that Ebb is built by clinical experts. The earlier in the experience we elevated that, the more people trusted it.

People were also concerned about their privacy, so this one was delicate. How much do you talk about data and privacy up front? You don't want to overwhelm people, so we had to strike the right balance.

We needed to sound human and explain in plain language how the data is handled, while offering deep dives for people who wanted to go further.

We also took the opportunity with this upfront experience to frame expectations and influence how people would safely engage with the AI. We doubled down on the core purpose of the experience, which is reflection, and highlighted its limitations, including that Ebb should not be seen as a replacement for human care. In this way, we tried to shape how people act and behave with the AI itself.

The next step was to nail that first reflective conversation with Ebb so that people would keep coming back. We adopted an iterative approach to refining the conversational experience, and research had a big role to play. A huge shout-out to Co-Lab last year and the folks from Duolingo who helped influence our approach.

We conducted…

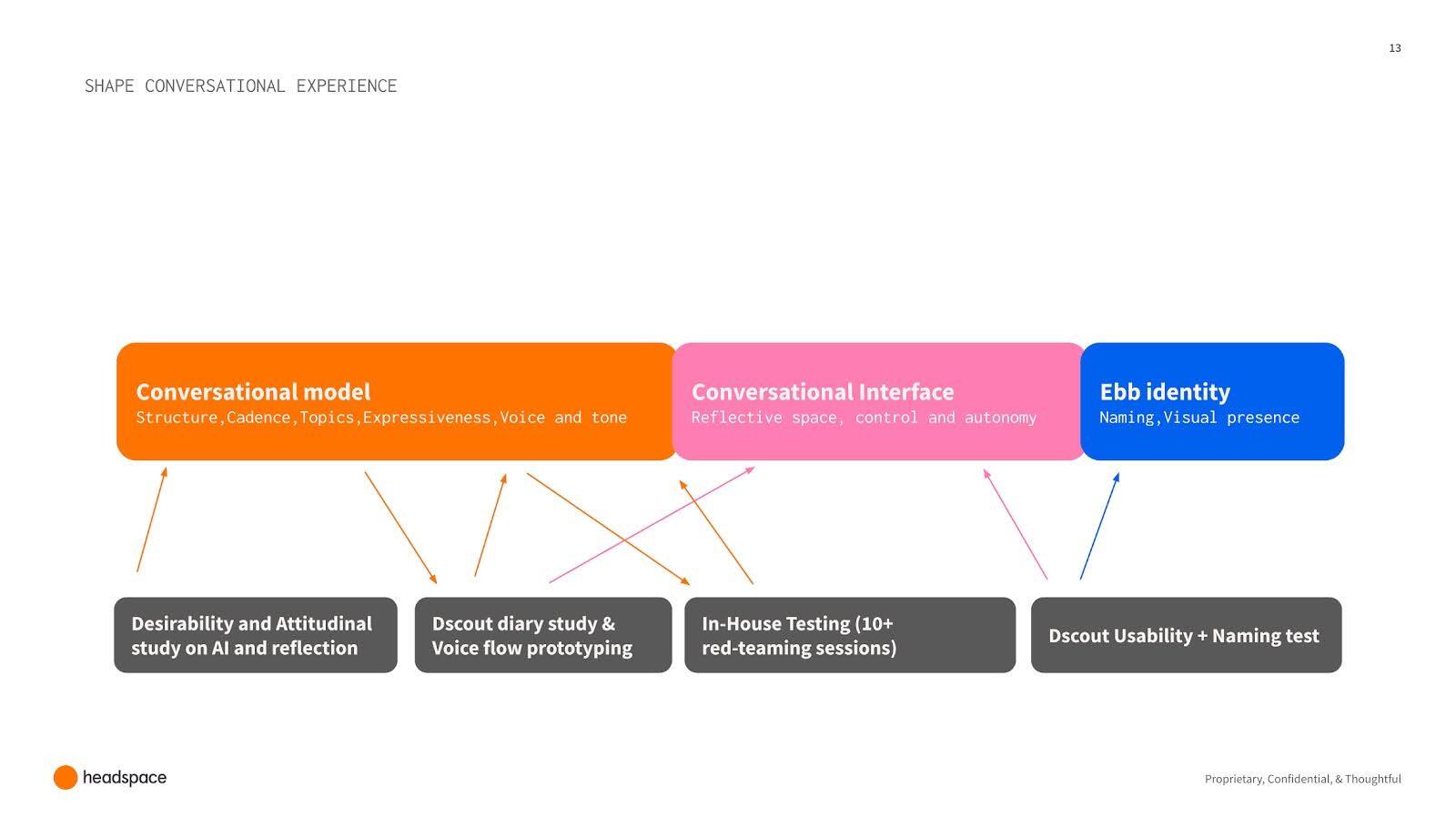

The feedback loops between conversation design and these methods (the desirability and attitudinal study on AI, diary studies and voice flow prototyping, and finally several rounds of internal red-teaming sessions) helped our conversation designers hone the structure and cadence of the conversation and fine-tune Ebb's voice and tone.

We also used two rounds of concept testing and usability testing to inform the design of the conversational interface and Ebb’s identity, all in support of safe and deep reflection for our members.

So how did this all come together in the experience? Let’s come back to the notion of safety.

One of the ways we helped members feel safe within the experience was to elevate their choice, control, and autonomy.

Members can choose to reflect through Processing thoughts and emotions. That option is inherently more open-ended and conversational, so people can be deep and expressive with Ebb, which guides them gently or asks deeper reflective questions to help them explore their thoughts.

On the other end, there's Finding gratitude. This option is more closed-ended and less conversational. It offers a list- and button-based experience, so people don't feel pressure to write walls of text to reflect on something. It’s about giving people the choice to be as open, expressive, and vulnerable as they would like to be.

To encourage quality reflection and free expression, we designed the conversational experience to feel open and calm while also giving people clear boundaries to keep them safe. For example, people can end or delete a conversation at any time if things get sensitive.

It’s also important to note that Ebb never offers advice the way a coach or therapist would; it waits for your signal before suggesting activities from our content library. Ebb is also powered by safety detection mechanisms that constantly sense whether your needs have escalated in any way, and if they have, it gently guides you to crisis resources or a provider.

Last but not least, I think shaping the persona of Ebb was important to create that sense of safety. We married psychology best practices and brand guidelines to shape the persona, voice, and approach of Ebb, and how it communicates with you.

We also shaped its visual presence to be gentle, hopeful, and compassionate. Design has a lot of power here to evoke a certain feeling with AI.

Ebb is currently in pilot, and we are learning a ton. One of the key questions was, “Is it serving its intended purpose?” The short answer is yes. People are reflecting on a wide range of topics, and it's quite humbling to see how much Ebb can support.

People are saying they feel validated and seen. They call out some of Ebb's humanlike qualities, describing it as kind, intelligent, and sometimes funny. Some even said they wished more people in their lives were like Ebb. Overall, we’ve gotten a lot of positive feedback so far.

We're also seeing a lot of repeat engagement, which could signal growing trust and interest, and the value people find in it over time.

One thing we were nervous about: because views on AI in mental health can be so polarizing, would this feature have a negative effect on our subscriptions? So far, we haven’t seen any negative effects on subscriptions, or even on engagement and retention metrics.

There's so much to learn here. I'm personally curious how much of a role AI's novelty plays, and whether we'll see a dip in engagement over time. On the other hand, as Ebb continues to be a part of people's lives, how does it actually help them reflect more deeply and form deeper connections?

To leave you with a few takeaways…

When it comes to attitudes towards AI, in some ways AI is fully mainstream and pervasive. Everybody's using it somehow, right? But people's opinions on AI vary by context. Someone could be fine with AI optimizing their calendar but uncomfortable sharing intimate thoughts with it.

Take the time to understand attitudes towards AI, both among your users and among your stakeholders, and use that learning to shape your process and approach, especially if you sense skepticism.

To garner more internal support for our work, we shared ongoing learnings and small member anecdotes from the field in our Slack channels. This gave people insight into our process and showed that we were being very mindful about how we built these experiences.

Over time, as we developed conversational prototypes, letting key internal stakeholders test the experience themselves really helped shift their perspectives on AI and Ebb.

We also ran large-scale testing through internal red teaming, inviting every employee in the organization to use Ebb, probe its vulnerabilities, and help us make it better. This helped us demystify the application of AI and gain broad buy-in across the organization. That buy-in is vital to building quality, ethical experiences, and to doing so quickly enough to stay competitive in the marketplace.

It is so important to consider the experiences of underrepresented communities right from inception. Upfront research with these groups can help you set the right foundation. As you start to prototype these AI experiences, the inherent unpredictability of how AI will respond reveals risks for users.

With that in mind, you have to be deliberate about how you test these experiences. For us, a safe way to test them was to partner with our internal identity- and experience-focused employee resource groups. They brought identity and minority perspectives that shaped and strengthened both the models and the experiences themselves.

I want to close with a reflection on gratitude. Recently, I became a parent, and I often hear the saying, "It takes a village to raise a child." I’ve come to realize that the same is true for building something meaningful—like Ebb. It has taken a village—a community of immensely talented and caring people—to create an AI product that is valuable, empathetic, and safe. I am deeply grateful to this team and to all of you for fostering a space where I can share my experience and perspective on building Ebb.